Anatomy of an Audio Effect Plugin

In this post I will be diving into the components that make up an audio plugin. Once again, this information comes from the book Designing Audio Effect Plugins in C++ For AAX, AU, and VST3 with DSP Theory by Will C. Pirkle (ISBN 13: 978-1138591936). Using this information, as well as Will's YouTube videos, I was able to create a basic volume plugin. The source code for that plugin can be found in my GitHub repo: https://github.com/wisjhn1721/HelloVolume

See the README.md file for links to Will's YouTube videos in case you're interested in creating your own plugin from scratch, as I have done here. Below you'll find my notes from Chapter 2, Anatomy of an Audio Plugin, of Will's book. I hope you enjoy!

2 Anatomy of an Audio Plugin

"The first thing to understand about the audio plugin specifications for AAX, AU, and VST3 is this: they are all fundamentally the same and they all implement the same sets of attributes and behaviors tailored specifically to the problem of packaging audio signal processing software in a pseudo-generic way. Plugins are encapsulated in C++ objects, derived from a specified base class, or set of base classes, that the manufacturer provides. The plugin host instantiates the plugin object and receives a base class pointer to the newly created object. The host may call only those functions that it has defined in the API, and the plugin is required to implement those functions correctly in order to be considered a proper plugin to the DAW—if required functions are missing or implemented incorrectly, the plugin will fail. This forms a contract between the manufacturer and the plugin developer. … The plugin of course is our little signal processing gem that is going to do something interesting with the audio, packaged as C++ objects, and the host is the DAW or other software that loads the plugin, obtains a single pointer to its fundamental base class, and interacts with it. " (2019, pg. 15)
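To make the contract concrete, here is a minimal sketch that is not taken from any real SDK. The names `PluginBase`, `GainPlugin`, `processFrame`, and `createPlugin()` are hypothetical stand-ins for what AAX, AU, and VST3 each define in their own way. The point it illustrates is that the host only ever holds a base class pointer and may call only the functions that base class declares.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Hypothetical base class standing in for an API's plugin interface.
// The host is compiled against this type only, never the derived class.
class PluginBase {
public:
    virtual ~PluginBase() = default;
    virtual std::string getName() const = 0;              // used to fill host menus
    virtual bool initialize(double sampleRate) = 0;       // called before streaming
    virtual void processFrame(float& left, float& right) = 0;  // DSP on one frame
};

// A developer's plugin: it derives from the base class and implements
// every required function, fulfilling its side of the contract.
class GainPlugin : public PluginBase {
public:
    std::string getName() const override { return "GainPlugin"; }
    bool initialize(double sampleRate) override {
        sampleRate_ = sampleRate;
        return sampleRate_ > 0.0;
    }
    void processFrame(float& left, float& right) override {
        left *= gain_;
        right *= gain_;
    }

private:
    double sampleRate_ = 0.0;
    float gain_ = 0.5f;  // fixed gain, for illustration only
};

// Hypothetical factory: in a real DLL this would be an exported symbol the
// host looks up by name. Here it simply hands back a base class pointer.
std::unique_ptr<PluginBase> createPlugin() {
    return std::make_unique<GainPlugin>();
}
```

A host would call `auto plugin = createPlugin();`, then `plugin->initialize(48000.0)`, and from then on talk to the plugin only through `PluginBase`.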

2.1 Plugin Packaging: Dynamic-Link Libraries (DLLs)

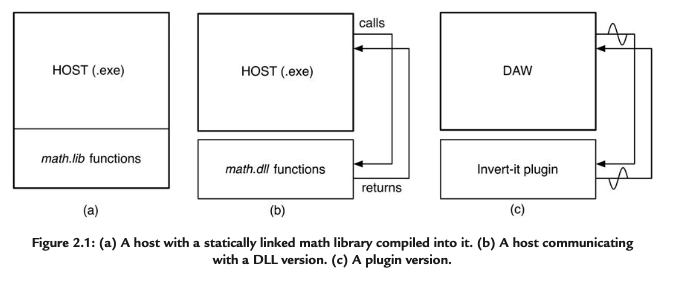

All plugins are packaged as dynamic-link libraries (DLLs); you will sometimes see these called dynamic linked or dynamically linked libraries. A DLL is a precompiled library that an executable links with at runtime rather than at compile time. Here the executable is the DAW, and the precompiled library, the DLL, is your plugin.

When you link a C++ program against a static library, you are statically linking to that library. The library contains a precompiled set of functions; the header you #include only declares them. Static linking is also called implicit linking. When the linker resolves a call to one of these functions, it copies the function's precompiled code from the library into your program. In this way, the extra code is built into your executable.

Dynamic linking, or explicit linking, occurs when linking to the functions happens at runtime. This means that the precompiled functions live in a separate file that our executable (the DAW) knows about and communicates with, but only after it starts running. One advantage of this arrangement, a host that connects to a component at runtime, is that it can extend the functionality of a host without the host knowing anything about the component when the host is compiled. That also makes it an ideal way to set up a plugin processing system.

Validation Phase

"When the host is first started, it usually goes through a process of trying to load all of the plugin DLLs it can find in the folder or folders that you specify (Windows) or in well defined API-specific folders (MacOS). This initial phase is done to test each plugin to ensure compatibility if the user decides to load it. This includes retrieving information such as the plugin’s name, which the host will need to populate its own plugin menus or screens, and may also include basic or even rigorous testing of the plugin. We will call this the validation phase." (2019, pg. 17)

Loading Phase

"Usually, the host unloads all of the plugins after the validation phase and then waits for the user to load the plugin onto an active track during a session. We will call this the loading phase. The validation phase already did at least part (if not all) of this once already; if you have bugs during validation, they are often caused during the loading operation. When your plugin is loaded, the DLL is pulled into the executable’s process address space, so that the host may retrieve a pointer from it that has an address within the DAW’s own address space. … The loading phase is where most of the plugin description occurs. The description is the first aspect of plugin design you need to understand, and it is mostly fairly simple stuff." (2019, pg. 17)

Processing Phase

"After the loading phase is complete, the plugin enters the processing phase, which is often implemented as an infinite loop on the host. During this phase, the host sends audio to your plugin, your cool DSP algorithm processes it, and then your plugin sends the altered data back to the host. This is where most of your mathematical work happens, and this is the focus of the book." (2019, pg. 17)

Unloading Phase

"Eventually, your plugin will be terminated either because the user unloads it or because the DAW or its session is closed. During this unloading phase, your plugin will need to destroy any allocated resources and free up memory." (2019, pg. 17)
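The four phases can be traced with a small host simulation. This is a sketch under simplifying assumptions: `TracePlugin` and `runHostSession` are hypothetical names, the "DLL" is just an object created with `std::make_unique`, and the processing loop stops after a fixed number of buffers instead of running until playback ends.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Hypothetical plugin that records which phase the host has reached.
class TracePlugin {
public:
    explicit TracePlugin(std::vector<std::string>& log) : log_(log) {}
    // Unloading phase: a real plugin would release its allocated resources here.
    ~TracePlugin() { log_.push_back("destroy"); }

    std::string getName() const { return "TracePlugin"; }

    void initialize(double sampleRate) {
        assert(sampleRate > 0.0);
        sampleRate_ = sampleRate;
        log_.push_back("initialize");
    }

    void processBuffer(float* samples, int count) {
        for (int i = 0; i < count; ++i) samples[i] *= 0.5f;  // placeholder DSP
        log_.push_back("process");
    }

private:
    std::vector<std::string>& log_;
    double sampleRate_ = 0.0;
};

// Sketch of a host walking one plugin through the four phases.
std::vector<std::string> runHostSession(int numBuffers) {
    std::vector<std::string> log;
    {   // Validation phase: load the plugin, read its description, unload it.
        auto probe = std::make_unique<TracePlugin>(log);
        log.push_back("validated " + probe->getName());
    }   // probe is destroyed here
    // Loading phase: the user inserts the plugin on a track.
    auto plugin = std::make_unique<TracePlugin>(log);
    plugin->initialize(48000.0);
    // Processing phase: a real host loops until playback stops;
    // this sketch stops after numBuffers buffers.
    std::vector<float> audio(64, 1.0f);
    for (int b = 0; b < numBuffers; ++b)
        plugin->processBuffer(audio.data(), static_cast<int>(audio.size()));
    // Unloading phase: destroying the object ends its lifetime.
    plugin.reset();
    return log;
}
```

Calling `runHostSession(2)` records the validation probe being created and destroyed, then one initialize, two process calls, and a final destroy.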

2.2 The Plugin Description

Each API specifies a slightly different mechanism for the plugin to let the host know information about it.

These include the most basic types of information:

- plugin name

- plugin short name (AAX only)

- plugin type: synth or FX, and variations within these depending on API

- plugin developer’s company name (vendor)

- vendor email address and website URL

There are some four-character codes that need to be set for AU, AAX, and VST3.

- product code: must be unique for each plugin your company sells

- vendor code: for Will's company it is “WILL”
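A four-character code is usually stored as a single 32-bit integer. The sketch below packs the characters with the first one in the most significant byte, which is the packing chosen here for illustration; each API has its own way of declaring these codes. The `PluginDescription` struct and its field names are hypothetical and simply collect the basic fields listed above.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Packs a four-character code such as "WILL" into a 32-bit integer,
// first character in the most significant byte.
// The parameter type accepts exactly four characters plus the terminator.
constexpr std::uint32_t fourCharCode(const char (&code)[5]) {
    return (static_cast<std::uint32_t>(static_cast<unsigned char>(code[0])) << 24) |
           (static_cast<std::uint32_t>(static_cast<unsigned char>(code[1])) << 16) |
           (static_cast<std::uint32_t>(static_cast<unsigned char>(code[2])) << 8) |
            static_cast<std::uint32_t>(static_cast<unsigned char>(code[3]));
}

enum class PluginType { Synth, FX };

// Hypothetical description record; not any API's actual descriptor.
struct PluginDescription {
    std::string name;
    std::string shortName;      // AAX only
    PluginType type;
    std::string vendor;
    std::string vendorEmail;
    std::string vendorURL;
    std::uint32_t productCode;  // must be unique for each plugin
    std::uint32_t vendorCode;   // e.g. fourCharCode("WILL")
};
```

With this packing, `fourCharCode("WILL")` yields `0x57494C4C` ('W' = 0x57, 'I' = 0x49, 'L' = 0x4C).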

2.2.1 The Plugin Description: Features and Options

In addition to these simple strings and flags, there are some more complicated things that the plugin needs to define for the host at load-time. These are listed here and described in the chapters ahead.

- plugin wants a side-chain input

- plugin creates a latency (delay) in the signal processing and needs to inform the host

- plugin creates a reverb or delay “tail” after playback is stopped and needs to inform the host that it wants to include this reverb tail; the plugin sets the tail time for the host

- plugin has a custom GUI that it wants to display for the user

- plugin wants to show a Pro Tools gain reduction meter (AAX)

- plugin factory presets
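Some of these options are numbers that the host needs in order to compensate or keep rendering. Here is a minimal sketch, assuming latency is reported in samples and tail time in seconds. The struct, its fields, and the helper functions are hypothetical, not any API's real names.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical feature record reported to the host at load time.
struct PluginFeatures {
    bool wantsSidechain = false;
    bool hasCustomGUI = false;
    std::uint32_t latencyInSamples = 0;  // delay the plugin adds to the signal
    double tailTimeSeconds = 0.0;        // how long output continues after playback stops
};

// Converts the reported latency into milliseconds at a given sample rate.
double latencyMilliseconds(const PluginFeatures& f, double sampleRate) {
    assert(sampleRate > 0.0);
    return 1000.0 * static_cast<double>(f.latencyInSamples) / sampleRate;
}

// Converts the tail time into the number of samples the host would keep rendering.
std::uint64_t tailSamples(const PluginFeatures& f, double sampleRate) {
    assert(sampleRate > 0.0);
    return static_cast<std::uint64_t>(std::ceil(f.tailTimeSeconds * sampleRate));
}
```

For example, 441 samples of latency at 44.1 kHz is 10 ms, and a 2 second reverb tail at 48 kHz is 96000 samples.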

2.3 Initialization: Defining the Plugin Parameter Interface

Almost all plugins we design today require some kind of GUI (also called the UI). The user interacts with the GUI to change the functionality of the plugin. Each control on the GUI is connected to a plugin parameter. ***The plugin must declare and describe the parameters to the host during the plugin-loading phase.***

There are three fundamental reasons that these parameters need to be exposed:

- If the user creates a DAW session that includes parameter automation, then the host is going to need to know how to alter these parameters on the plugin object during playback.

- All APIs allow the plugin to declare “I don’t have a GUI,” in which case the host will provide a simple GUI for the user. The appearance of this default UI is left to the DAW programmers; usually it is very plain and sparse, just a bunch of sliders or knobs.

- When the user saves a DAW session, the parameter states are saved; likewise when the user loads a session, the parameter states need to be recalled.
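The third reason, saving and recalling parameter state, can be sketched as a round trip through text. The format here (one "parameterID value" pair per line) and the function names are assumptions for illustration only; each API defines its own state format, and real ones are often binary.

```cpp
#include <cassert>
#include <map>
#include <sstream>
#include <string>

// Hypothetical session-state format: one "parameterID value" pair per line.
// Assumes parameter IDs contain no whitespace.
std::string saveState(const std::map<std::string, double>& params) {
    std::ostringstream out;
    out.precision(17);  // enough significant digits for doubles to round-trip
    for (const auto& entry : params)
        out << entry.first << ' ' << entry.second << '\n';
    return out.str();
}

// Restores parameter values when the session is reopened.
std::map<std::string, double> loadState(const std::string& text) {
    std::map<std::string, double> params;
    std::istringstream in(text);
    std::string id;
    double value = 0.0;
    while (in >> id >> value)
        params[id] = value;
    return params;
}
```

Writing 17 significant digits ensures a value such as 0.1 comes back bit-for-bit identical, so a reopened session sounds exactly like the saved one.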

There are two distinct types of parameters: continuous and string-list.

- Continuous parameters usually connect to knobs or sliders that can transmit continuous values. This includes float, double, and int data types.

- String-list parameters present the user with a list of strings to choose from, and the GUI controls may be simple (a drop-down list box) or complex (a rendering of switches with graphic symbols). The list of strings is sometimes provided in a comma-separated list, and other times packed into arrays of string objects, depending on the API.

The parameter attributes include:

- parameter name (“Volume”)

- parameter units (“dB”)

- whether the parameter is a numerical value or a list of string values and, if it is a list of string values, the strings themselves

- parameter minimum and maximum limits

- parameter default value for a newly created plugin

- control taper information (linear, logarithmic, etc.)

- other API-specific information

- auxiliary information

In all of the APIs, you create an individual C++ object for each parameter your plugin wants to expose.
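Here is a minimal sketch of such parameter objects, one for each of the two types above. It assumes that the control reports a normalized position in [0, 1] (some APIs work this way, others pass plain values). The struct names, fields, and `fromNormalized` are hypothetical. The log taper shown is one common choice; it requires positive limits.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <string>
#include <vector>

enum class Taper { Linear, Log };

// Hypothetical continuous parameter object; a real API defines its own class.
struct ContinuousParameter {
    std::string name;     // e.g. "Volume"
    std::string units;    // e.g. "dB"
    double minValue;
    double maxValue;
    double defaultValue;  // value for a newly created plugin
    Taper taper;

    // Maps a normalized control position in [0, 1] onto [minValue, maxValue].
    double fromNormalized(double x) const {
        x = std::min(std::max(x, 0.0), 1.0);
        if (taper == Taper::Log) {
            assert(minValue > 0.0 && maxValue > 0.0);  // log taper needs positive limits
            return minValue * std::pow(maxValue / minValue, x);
        }
        return minValue + x * (maxValue - minValue);
    }
};

// Hypothetical string-list parameter; its value is an index into the list.
struct StringListParameter {
    std::string name;
    std::vector<std::string> strings;
    int defaultIndex;

    // Divides the normalized range [0, 1] evenly among the strings.
    const std::string& fromNormalized(double x) const {
        assert(!strings.empty());
        x = std::min(std::max(x, 0.0), 1.0);
        const int n = static_cast<int>(strings.size());
        const int index = std::min(static_cast<int>(x * n), n - 1);
        return strings[index];
    }
};
```

With a linear "Volume" parameter from -60 to +12 dB, the knob's midpoint is -24 dB. A log-tapered 20 Hz to 20 kHz frequency knob puts its midpoint at the geometric mean, about 632 Hz, which matches how we hear pitch better than a linear sweep would.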

2.3.1 Initialization: Defining Channel I/O Support

An audio plugin is designed to work with some combination of input and output channels. The most basic channel support is for three common setups: mono in/mono out, mono in/stereo out, and stereo in/stereo out.
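In practice, the plugin answers "yes" or "no" when the host proposes a channel layout. The following sketch handles only the three setups above. The function name and its integer-count signature are assumptions; each API describes layouts with its own types.

```cpp
#include <cassert>

// Hypothetical check a plugin might run when the host proposes a layout.
// Accepts mono->mono, mono->stereo, and stereo->stereo only.
bool supportsChannelIO(int numInputs, int numOutputs) {
    assert(numInputs >= 0 && numOutputs >= 0);
    return (numInputs == 1 && numOutputs == 1) ||
           (numInputs == 1 && numOutputs == 2) ||
           (numInputs == 2 && numOutputs == 2);
}
```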

2.3.2 Initialization: Sample Rate Dependency

Almost all of the plugin algorithms we study are sensitive to the current DAW sample rate, and its value is usually part of a set of equations we use to process the audio signal. In all APIs, there is at least one mechanism that allows the plugin to obtain the current sample rate for use in its calculations. In all cases, the plugin retrieves the sample rate from a function call or an API member variable.
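Two small examples show why the sample rate matters. A delay time given in milliseconds maps to a different number of samples at each rate, and an oscillator's per-sample phase step depends on the rate too. The helper names are hypothetical; the formulas are the standard conversions.

```cpp
#include <cassert>

// Converts a delay time in milliseconds to a (possibly fractional) sample count.
double msToSamples(double milliseconds, double sampleRate) {
    assert(sampleRate > 0.0);
    return milliseconds * sampleRate / 1000.0;
}

// Per-sample phase increment, in cycles, for an oscillator at frequencyHz.
double phaseIncrement(double frequencyHz, double sampleRate) {
    assert(sampleRate > 0.0);
    return frequencyHz / sampleRate;
}
```

The same 10 ms delay needs 441 samples at 44.1 kHz but 480 samples at 48 kHz. If the plugin ignored the sample rate, every time-based parameter would be wrong whenever the session rate changed.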

2.4 Processing: Preparing for Audio Streaming

The plugin must prepare itself for each audio streaming session. This usually involves resetting the algorithm, clearing old data out of memory structures, resetting buffer indexes and pointers, and preparing the algorithm for a fresh batch of audio. Each API includes a function that is called prior to audio streaming, and this is where you will perform these operations.
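A delay line shows what "preparing for audio streaming" typically involves: resizing and clearing a buffer, then resetting its index. This is a sketch only. `SimpleDelay` and `reset` are hypothetical names, and the real preparation function is named differently in each API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical delay plugin showing what "prepare for streaming" means.
class SimpleDelay {
public:
    // Called before audio streaming starts (the API-specific name differs).
    void reset(double sampleRate, double delayMs) {
        assert(sampleRate > 0.0 && delayMs >= 0.0);
        const auto length = static_cast<std::size_t>(sampleRate * delayMs / 1000.0);
        buffer_.assign(length > 0 ? length : 1, 0.0);  // clear old audio out of memory
        writeIndex_ = 0;                               // reset the buffer index
    }

    // Circular buffer: read the oldest sample, then overwrite it with the newest.
    double processSample(double input) {
        const double output = buffer_[writeIndex_];
        buffer_[writeIndex_] = input;
        writeIndex_ = (writeIndex_ + 1) % buffer_.size();
        return output;
    }

private:
    std::vector<double> buffer_{0.0};
    std::size_t writeIndex_ = 0;
};
```

Without the reset, stale audio from the previous playback pass would leak out of the delay line when the user presses play again.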

2.4.1 Processing: Audio Signal Processing (DSP)

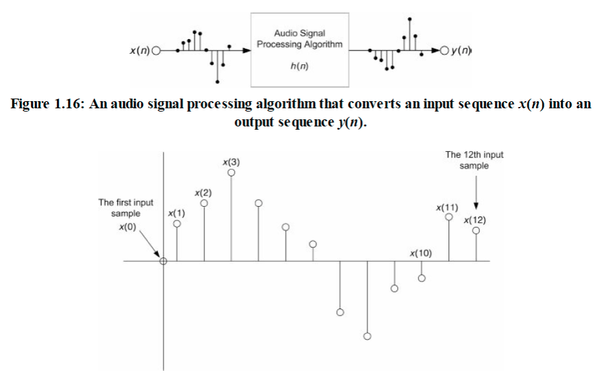

These days, and across all APIs, the audio input and output samples are formatted as floating point data in the range [−1.0, +1.0]. We will perform floating point math, and we will write all of our C++ objects to operate internally with the double data type.

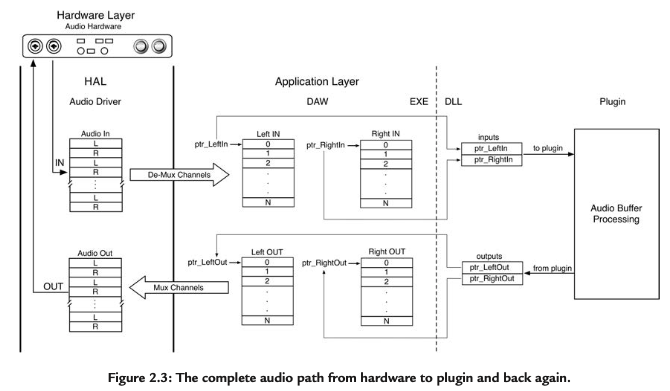

"Figure 2.3 [shows] the Hardware Layer with the physical audio adaptor. It is connected to the audio driver via the hardware bus. The driver resides in the Hardware Abstraction Layer (HAL). Regardless of how the audio arrives from the adaptor, the driver formats it according to its OS-defined specification and that is usually in an interleaved format with one sample from each channel in succession. For stereo data, the first sample is from the left channel and then it alternates as left, right, left, right, etc. The driver delivers the interleaved data to the application in chunks called buffers."

"The DAW then takes this data and de-interleaves it, placing each channel in its own buffer called the input channel buffer. The DAW also prepares (or reserves) buffers that the plugin will write the processed audio data into called the output channel buffers. The DAW then delivers the audio data to the plugin by sending it a pointer to each of the buffers. The DAW also sends the lengths of the buffers along with channel count information. … When the plugin returns the processed audio by returning the pointers to the output buffers, the DAW then re-interleaves the data and ships it off to the audio driver, or alternatively writes it into an audio file." (2019, pg. 21-22)
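The de-interleave and re-interleave steps the DAW performs can be sketched as follows. For clarity this uses `std::vector` rather than the raw pointers a real host passes. The function names are illustrative, not part of any API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Splits interleaved samples (L, R, L, R, ...) into one buffer per channel.
std::vector<std::vector<float>> deinterleave(const std::vector<float>& interleaved,
                                             std::size_t numChannels) {
    assert(numChannels > 0 && interleaved.size() % numChannels == 0);
    const std::size_t numFrames = interleaved.size() / numChannels;
    std::vector<std::vector<float>> channels(numChannels, std::vector<float>(numFrames));
    for (std::size_t frame = 0; frame < numFrames; ++frame)
        for (std::size_t ch = 0; ch < numChannels; ++ch)
            channels[ch][frame] = interleaved[frame * numChannels + ch];
    return channels;
}

// Re-interleaves per-channel buffers before they go back to the driver.
std::vector<float> interleave(const std::vector<std::vector<float>>& channels) {
    assert(!channels.empty());
    const std::size_t numChannels = channels.size();
    const std::size_t numFrames = channels[0].size();
    std::vector<float> out(numFrames * numChannels);
    for (std::size_t frame = 0; frame < numFrames; ++frame)
        for (std::size_t ch = 0; ch < numChannels; ++ch)
            out[frame * numChannels + ch] = channels[ch][frame];
    return out;
}
```

For stereo input `{L0, R0, L1, R1, ...}`, channel 0 receives every even-indexed sample and channel 1 every odd-indexed one, and `interleave` reverses the operation exactly.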

We can process each buffer of data independently, which we call buffer processing, or we can process one sample from each buffer at a time, called frame processing. Buffer processing is fine for multi-channel plugins whose channels may be processed independently, for example an equalizer. But many algorithms require that the information for every channel be known at the time each channel is processed. Since frame processing is a subset of buffer processing and is the most generic, we will be doing all of the processing in the book in frames.
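A stereo width control is a good example of an algorithm that needs every channel at once. Each output sample depends on both the left and right inputs, so it cannot be computed one channel buffer at a time. The mid/side width effect and the function name here are chosen purely to illustrate frame processing; they are not from the book. The internal math uses `double`, as described above.

```cpp
#include <cassert>
#include <cstddef>

// Frame processing: each loop iteration handles one sample from every channel.
// width = 1 leaves the signal unchanged, width = 0 collapses it to mono.
void processStereoWidth(const float* inL, const float* inR, float* outL, float* outR,
                        std::size_t numFrames, double width) {
    assert(width >= 0.0);
    for (std::size_t n = 0; n < numFrames; ++n) {
        const double left = inL[n];
        const double right = inR[n];
        const double mid = 0.5 * (left + right);
        const double side = 0.5 * (left - right) * width;
        outL[n] = static_cast<float>(mid + side);
        outR[n] = static_cast<float>(mid - side);
    }
}
```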

2.5 Mixing Parameter Changes With Audio Processing

There are two distinct activities happening during our plugin operation.

1) the DAW is sending audio data to the plugin for processing

2) the user is interacting with the GUI—or running automation, or doing both

This requires the plugin to periodically re-configure its internal operation and algorithm parameters. All of these things come together during the buffer processing cycle.

- Pre-processing: transfer GUI/automation parameter changes into the plugin and update the plugin’s internal variables as needed.

- Processing: perform the DSP operations on the audio using the newly adjusted internal variables.

- Post-processing: send parameter information back to the GUI; for plugins that support MIDI output, this is where they would generate these MIDI messages as well.

2.5.1 Plugin Variables and Plugin Parameters

Each parameter object stores its current value in an internal variable. However, the parameter’s variable is not what the plugin will use during processing, and in some cases (AU), the plugin can never really access that variable directly. Instead, the plugin defines its own set of internal variables that it uses during the buffer processing cycle, and copies information from the parameters into them.

- Each GUI control or plugin parameter (inbound or outbound) will ultimately connect to a member variable on the plugin object.

- During the pre-processing phase, the plugin transfers information from each of the inbound parameters into each of its corresponding member variables; if the plugin needs to further process the parameter values (e.g. convert dB to a scalar multiplier) then that is done here as well.

- During the processing phase, the DSP algorithm uses the plugin’s internal variables for calculations; for output parameters like metering data, the plugin assembles that during this phase as well.

- During the post-processing phase, the plugin transfers output information from its internal variables into the associated outbound parameters.

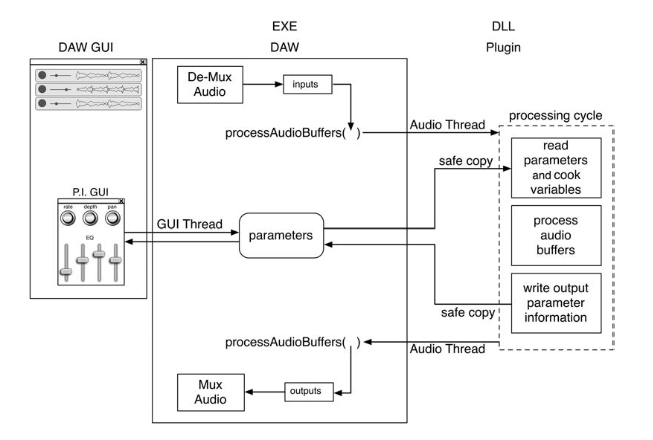

The figure above shows how the DAW and plugin are connected. The audio is delivered to the plugin with a buffer processing function call (processAudioBuffers). During the buffer processing cycle, input parameters are read, audio processing happens, and then output parameters are written. The outbound audio is sent to the speakers or an audio file, and the outbound parameters are sent to the GUI. (2019, pg. 24)
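The full cycle can be sketched for a simple volume plugin. Only the name `processAudioBuffers` comes from the text. Its signature here (one mono input and output buffer) is an assumption, and `VolumePlugin`, `preProcess`, and `postProcess` are hypothetical. Pre-processing copies the dB parameter and cooks it into a scalar gain. Processing touches only internal variables. Post-processing writes the metering result to the outbound parameter.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>

// Hypothetical volume plugin showing the pre-process / process / post-process cycle.
class VolumePlugin {
public:
    double volumeParamDb = 0.0;   // inbound parameter (written by GUI or automation)
    double peakMeterParam = 0.0;  // outbound parameter: linear peak of the last buffer

    void processAudioBuffers(const float* in, float* out, std::size_t numFrames) {
        assert(in != nullptr && out != nullptr);
        preProcess();
        // Processing: the DSP only touches the plugin's internal variables.
        double peak = 0.0;
        for (std::size_t n = 0; n < numFrames; ++n) {
            const double y = in[n] * volumeGain_;
            peak = std::max(peak, std::fabs(y));
            out[n] = static_cast<float>(y);
        }
        peak_ = peak;  // metering data assembled during processing
        postProcess();
    }

private:
    void preProcess() {
        volumeDb_ = volumeParamDb;                       // raw copy: dB into a dB variable
        volumeGain_ = std::pow(10.0, volumeDb_ / 20.0);  // cooked copy: dB to scalar multiplier
    }
    void postProcess() { peakMeterParam = peak_; }      // internal variable to outbound parameter

    double volumeDb_ = 0.0;
    double volumeGain_ = 1.0;
    double peak_ = 0.0;
};
```

Setting `volumeParamDb` to -20 dB gives a gain of 0.1, so an input sample of 1.0 comes out as roughly 0.1, and the peak meter parameter reports about 0.1 after the buffer.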

For almost all of our plugin parameters, we will need to cook the data coming from the GUI before using it in our calculations. We will adhere to the following guidelines:

- Each inbound plugin parameter value will be transferred into an internal plugin member variable that corresponds directly to its raw data; e.g. a dB-based parameter value is transferred into a dB-based member variable.

- If the plugin requires cooking the data, it may do so but it will need to create a second variable to store the cooked version.

- Each outbound plugin parameter will likewise be connected to an internal variable.

- All VU meter data must be in the range of 0.0 (meter off) to 1.0 (meter fully on), so any conversion to that range must be done before transferring the plugin variable to the outbound parameter.
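One way to do that conversion is to take a linear peak level, convert it to dB, and map a chosen dB window onto the 0.0 to 1.0 meter range. The -60 dB floor and the function name are assumptions for illustration; real meters use various ballistics and scales.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Maps a linear peak level to 0.0 (meter off) .. 1.0 (meter fully on).
// Levels at or below floorDb read 0.0; 0 dBFS and above read 1.0.
double peakToMeter(double linearPeak, double floorDb = -60.0) {
    assert(floorDb < 0.0);
    if (linearPeak <= 0.0) return 0.0;             // silence: meter off
    const double db = 20.0 * std::log10(linearPeak);
    const double meter = (db - floorDb) / -floorDb;  // floorDb..0 dB -> 0..1
    return std::min(std::max(meter, 0.0), 1.0);      // clamp into the meter range
}
```

With the default floor, a peak of 1.0 (0 dBFS) reads 1.0, a -30 dB peak reads 0.5, and anything louder than 0 dBFS is clamped to 1.0.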

2.5.2 Parameter Smoothing

If the user makes drastic adjustments on a GUI control, the parameter changes arriving at the plugin may be coarsely quantized, which can cause audible clicks while the control is being moved (sometimes called zipper noise). To alleviate this issue, we may employ parameter smoothing, which uses interpolation to spread each parameter change out gradually so that the value does not jump abruptly.
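Here is a minimal sketch of linear-interpolation smoothing. When a new target arrives, the value moves toward it in equal steps over a fixed number of samples instead of jumping. The class name and the per-sample `next()` interface are hypothetical, and linear ramping is only one option; one-pole (exponential) smoothing is another common choice.

```cpp
#include <cassert>

// Linear-ramp parameter smoother.
class LinearSmoother {
public:
    explicit LinearSmoother(int rampSamples, double initial = 0.0)
        : rampSamples_(rampSamples > 0 ? rampSamples : 1), current_(initial), target_(initial) {}

    // Called when a new parameter value arrives from the GUI or automation.
    void setTarget(double target) {
        target_ = target;
        step_ = (target_ - current_) / rampSamples_;
        remaining_ = rampSamples_;
    }

    // Called once per sample; returns the smoothed value for that sample.
    double next() {
        if (remaining_ > 0) {
            current_ += step_;
            if (--remaining_ == 0) current_ = target_;  // land exactly on the target
        }
        return current_;
    }

private:
    int rampSamples_;
    double current_;
    double target_;
    double step_ = 0.0;
    int remaining_ = 0;
};
```

With a 4-sample ramp, a jump from 0.0 to 1.0 becomes 0.25, 0.5, 0.75, 1.0, and the value holds at 1.0 afterward. In practice the ramp would be a few milliseconds long, converted to samples at the current sample rate.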

2.5.5 Multi-Threaded Software

The two things that need to appear to happen at once, the audio processing and the user interaction with the GUI, are actually happening on two different threads that time-share the CPU. This means that our plugin is necessarily a multi-threaded piece of software.

The fact that the two threads running asynchronously need to access the same information is at the core of the multi-threaded software problem. When two threads compete to write data to the same variable, they are said to be in a race condition: each is racing against the other to write the data and in the end the data may still be corrupted. Race conditions and inconsistent data are our fundamental problems here.
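One common way to share a single parameter value safely is `std::atomic`. Each load and store is indivisible, so the audio thread never reads a half-written value. This is one option, not necessarily the book's method, and it does not make multi-step updates (several related parameters changed together) atomic. The names `SharedParameter` and `simulateGuiAndAudio` are hypothetical, and the busy-waiting audio loop only stands in for real buffer callbacks.

```cpp
#include <atomic>
#include <cassert>
#include <thread>

// One parameter shared between the GUI thread (writer) and audio thread (reader).
struct SharedParameter {
    std::atomic<double> value{0.0};
};

// Simulates a GUI thread writing while an audio thread reads.
// Returns the last value the audio thread observed after the GUI finished.
double simulateGuiAndAudio(SharedParameter& param, int numWrites) {
    assert(numWrites > 0);
    std::atomic<bool> guiDone{false};
    std::thread gui([&] {
        for (int i = 1; i <= numWrites; ++i)
            param.value.store(static_cast<double>(i), std::memory_order_relaxed);
        guiDone.store(true, std::memory_order_release);  // publishes the writes above
    });
    double seen = 0.0;
    std::thread audio([&] {
        // Stand-in for buffer callbacks: read the parameter until the GUI is done.
        while (!guiDone.load(std::memory_order_acquire))
            seen = param.value.load(std::memory_order_relaxed);
        seen = param.value.load(std::memory_order_relaxed);  // guaranteed to see the last write
    });
    gui.join();
    audio.join();
    return seen;
}
```

The release store on `guiDone` paired with the acquire load guarantees that, once the audio thread sees the GUI is finished, its next read returns the final value written.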